Why I Built This

I wanted to build a chat app that could handle a lot of users without falling apart. Simple enough goal, but it turned out to be way more complex than I initially thought. The app needed real-time messaging, user presence, and room management, and it had to stay fast even with thousands of people online.

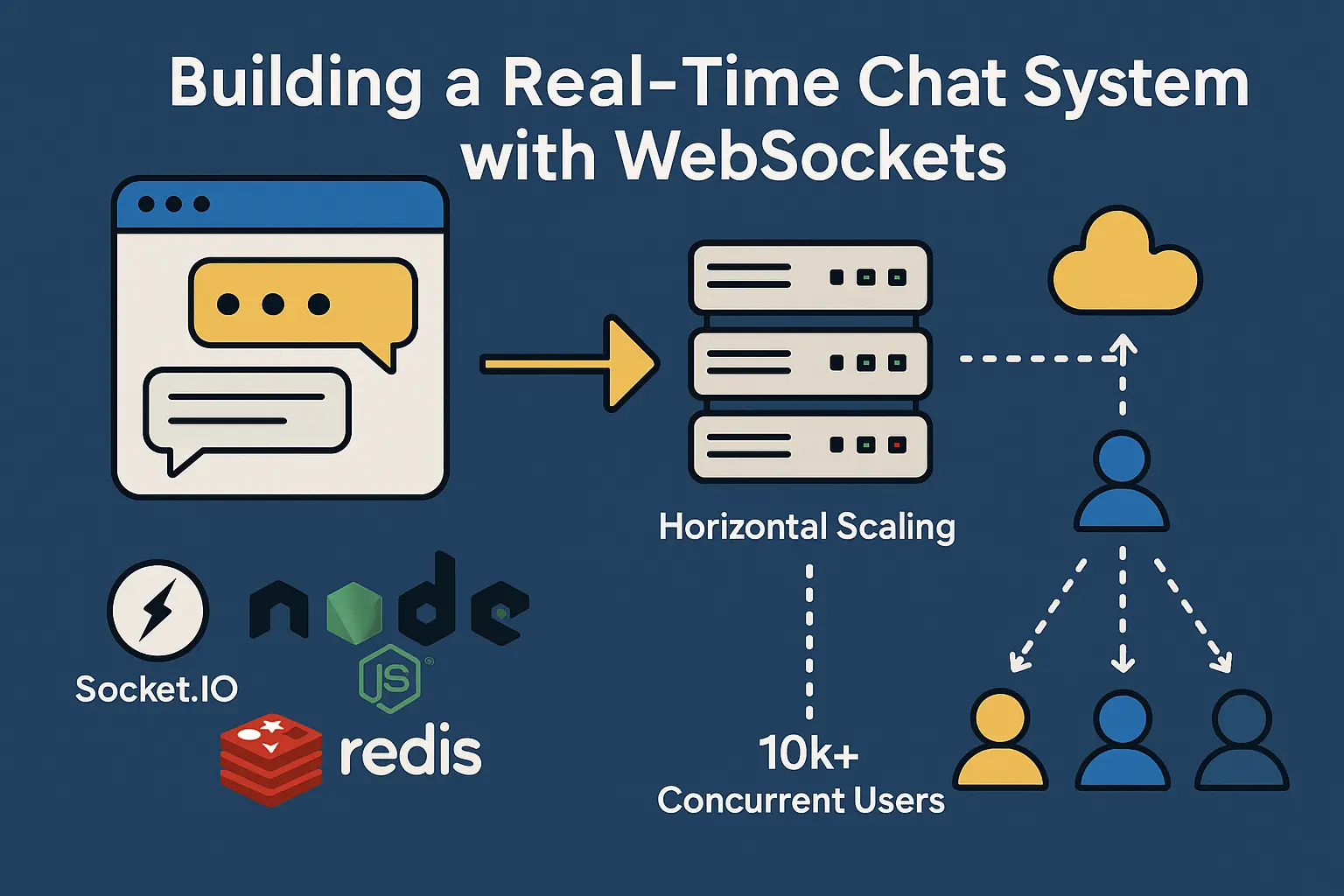

I went with Socket.IO for handling WebSocket connections, Node.js for the backend, and Redis for storing sessions and coordinating between multiple server instances. This stack gave me the real-time features I needed while making it possible to scale horizontally.

How It's Put Together

The architecture is pretty straightforward: any number of Node.js servers can accept connections, and they all communicate through Redis. So if someone connects to server A and their friend connects to server B, messages still get routed correctly between them.

Each server maintains its own WebSocket connections, but message routing and shared user state (who's online, who's in which room) go through Redis pub/sub channels. It's like having multiple front doors that all lead into the same backend system.
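To make the routing idea concrete, here's a toy in-memory sketch. It is not how Socket.IO's Redis adapter is actually implemented: `FakePubSub` and `ChatServer` are made-up names, and the fake bus just stands in for a Redis pub/sub channel. The point is only that a sender never needs to know which server the recipient is connected to.

```javascript
// Toy stand-in for Redis pub/sub (hypothetical, in-memory only):
// every subscriber of a channel receives every published payload.
class FakePubSub {
  constructor() {
    this.subscribers = new Map(); // channel -> Set of callbacks
  }
  subscribe(channel, callback) {
    if (!this.subscribers.has(channel)) this.subscribers.set(channel, new Set());
    this.subscribers.get(channel).add(callback);
  }
  publish(channel, payload) {
    for (const callback of this.subscribers.get(channel) || []) callback(payload);
  }
}

// Hypothetical server instance: it only knows about its own local sockets.
class ChatServer {
  constructor(name, bus) {
    this.name = name;
    this.bus = bus;
    this.localSockets = new Map(); // userId -> { roomId, inbox }
    // Every instance listens on the same shared channel.
    bus.subscribe('chat', (msg) => this.deliverLocally(msg));
  }
  connect(userId, roomId) {
    const socket = { roomId, inbox: [] };
    this.localSockets.set(userId, socket);
    return socket;
  }
  // Sending goes through the bus, never straight to local sockets.
  send(roomId, text) {
    this.bus.publish('chat', { roomId, text, via: this.name });
  }
  // Each instance delivers only to the sockets it holds itself.
  deliverLocally(msg) {
    for (const socket of this.localSockets.values()) {
      if (socket.roomId === msg.roomId) socket.inbox.push(msg.text);
    }
  }
}

const bus = new FakePubSub();
const serverA = new ChatServer('A', bus);
const serverB = new ChatServer('B', bus);

const alice = serverA.connect('alice', 'room-1');
const bob = serverB.connect('bob', 'room-1');
const carol = serverB.connect('carol', 'room-2');

serverA.send('room-1', 'Hello');
console.log(alice.inbox, bob.inbox, carol.inbox);
```

Alice and Bob are on different servers but both receive the message, while Carol (same server as Bob, different room) receives nothing.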

    // Basic server setup
    // Assumption: a plain Node HTTP server; swap in your own Express/HTTPS server.
    const server = require('http').createServer();
    const io = require('socket.io')(server);
    const redisAdapter = require('socket.io-redis');

    // Use Redis to sync between multiple server instances
    io.adapter(redisAdapter({
      host: process.env.REDIS_HOST || 'localhost',
      port: Number(process.env.REDIS_PORT) || 6379
    }));

    // updateUserPresence, generateMessageId, sanitizeMessage and saveMessage
    // are app-specific helpers defined elsewhere.
    io.on('connection', (socket) => {
      console.log(`User connected: ${socket.id}`);

      socket.on('join-room', (roomId, userId) => {
        socket.join(roomId);
        socket.userId = userId;

        // Let others know someone joined
        socket.to(roomId).emit('user-joined', {
          userId: userId,
          socketId: socket.id,
          timestamp: Date.now()
        });

        // Track who's online
        updateUserPresence(userId, roomId, 'online');
      });

      socket.on('send-message', (data) => {
        const { roomId, message, userId } = data || {};

        // Ignore missing, non-string, or whitespace-only messages
        if (typeof message !== 'string' || message.trim().length === 0) return;

        const messageData = {
          id: generateMessageId(),
          userId: userId,
          message: sanitizeMessage(message),
          timestamp: Date.now(),
          roomId: roomId
        };

        // Save message to database
        saveMessage(messageData);

        // Send to everyone in the room
        io.to(roomId).emit('new-message', messageData);
      });
    });

Problems I Ran Into

The hardest part was definitely managing connections across multiple server instances. When users are spread across different servers, making sure messages get delivered properly required some careful Redis setup.

Memory leaks from bad cleanup: This was a real pain. When people's internet cuts out or they close their browser suddenly, the connection doesn't always clean up properly. These leftover connections would just sit there eating memory.

    // Proper cleanup when someone disconnects.
    // 'disconnecting' fires while socket.rooms is still populated; by the time
    // 'disconnect' fires, the socket has already left its rooms.
    socket.on('disconnecting', (reason) => {
      console.log(`User disconnecting: ${socket.id}, reason: ${reason}`);

      // Update their online status
      if (socket.userId) {
        updateUserPresence(socket.userId, null, 'offline');
      }

      // Tell other users they left.
      // socket.rooms is a plain object in Socket.IO v2 and a Set in v3+.
      const rooms = socket.rooms instanceof Set
        ? [...socket.rooms]
        : Object.keys(socket.rooms);

      rooms.forEach(room => {
        // Skip the socket's own private room
        if (room !== socket.id) {
          socket.to(room).emit('user-left', {
            userId: socket.userId,
            socketId: socket.id,
            timestamp: Date.now()
          });
        }
      });

      // Clear any running timers
      if (socket.heartbeatInterval) {
        clearInterval(socket.heartbeatInterval);
      }
    });

Messages arriving out of order: With multiple servers and async operations happening everywhere, messages would sometimes arrive in the wrong order. "How are you?" would show up before "Hello", which made conversations confusing.
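On the client, one way to cope is a small reorder buffer. This is a minimal sketch, assuming every message carries a per-room `sequence` number that increases by one (the server snippet that follows assigns one with Redis `INCR`, which starts at 1 for a new key). `ReorderBuffer` is a hypothetical name, not part of Socket.IO. A client joining mid-conversation would need to pass the right starting sequence, and a real version would need a timeout so a lost message can't stall the buffer forever.

```javascript
// Holds back messages that arrive early and releases them in sequence order.
class ReorderBuffer {
  constructor(onDeliver, firstSequence = 1) {
    this.onDeliver = onDeliver;        // called once per message, in order
    this.nextSequence = firstSequence; // the sequence number we are waiting for
    this.pending = new Map();          // sequence -> message that arrived early
  }

  receive(message) {
    // Drop duplicates and anything already delivered.
    if (message.sequence < this.nextSequence) return;
    this.pending.set(message.sequence, message);

    // Flush every message that is now contiguous with what was delivered.
    while (this.pending.has(this.nextSequence)) {
      const next = this.pending.get(this.nextSequence);
      this.pending.delete(this.nextSequence);
      this.nextSequence += 1;
      this.onDeliver(next);
    }
  }
}

// Usage: messages arrive swapped but are shown in the right order.
const shown = [];
const buffer = new ReorderBuffer((m) => shown.push(m.message));
buffer.receive({ sequence: 2, message: 'How are you?' });
buffer.receive({ sequence: 1, message: 'Hello' });
console.log(shown);
```

In a real client, `buffer.receive` would be called from the `new-message` handler instead of rendering the message directly.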

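    // Fix message ordering with sequence numbers.
    // The counter lives in Redis so every server instance shares it; `redis` is
    // assumed to be a promise-based Redis client created elsewhere.
    socket.on('send-message', async (data) => {
      const { roomId, message, userId } = data || {};
      if (typeof message !== 'string' || message.trim().length === 0) return;

      try {
        // Get next sequence number atomically
        const sequence = await redis.incr(`room:${roomId}:sequence`);

        const messageData = {
          id: generateMessageId(),
          sequence: sequence,
          userId: userId,
          message: sanitizeMessage(message),
          timestamp: Date.now(),
          roomId: roomId
        };

        await saveMessage(messageData);

        // Client can use sequence number to reorder if needed
        io.to(roomId).emit('new-message', messageData);
      } catch (err) {
        // Without this, a Redis or database failure becomes an unhandled rejection
        console.error(`Failed to send message in room ${roomId}:`, err);
      }
    });

Making It Handle Load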

Getting it to work with 10,000+ concurrent users required some optimizations that weren't obvious at first:

Connection pooling: Creating a new Redis connection for every operation was killing performance. Setting up a connection pool made a huge difference.
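As a rough illustration of the pooling idea (not the exact setup used here), the sketch below keeps a fixed number of connections open and hands them out to callers. `ConnectionPool` and the `createConnection` factory are hypothetical; in a real app the factory would open a Redis client. Depending on the client library, simply reusing one long-lived client may already be enough.

```javascript
// Minimal fixed-size pool: connections are created lazily, then reused.
class ConnectionPool {
  constructor(createConnection, size) {
    this.createConnection = createConnection; // async factory (assumed)
    this.size = size;
    this.created = 0;
    this.idle = [];    // connections ready for reuse
    this.waiters = []; // resolvers for callers waiting on a free connection
  }

  async acquire() {
    if (this.idle.length > 0) return this.idle.pop();
    if (this.created < this.size) {
      this.created += 1;
      try {
        return await this.createConnection();
      } catch (err) {
        this.created -= 1; // give the slot back if opening failed
        throw err;
      }
    }
    // Pool exhausted: wait until someone releases a connection.
    return new Promise((resolve) => this.waiters.push(resolve));
  }

  release(connection) {
    const waiter = this.waiters.shift();
    if (waiter) waiter(connection);
    else this.idle.push(connection);
  }

  // Run a task with a pooled connection and always hand it back.
  async use(task) {
    const connection = await this.acquire();
    try {
      return await task(connection);
    } finally {
      this.release(connection);
    }
  }
}

// Usage with a fake connection factory that counts how many were opened.
let opened = 0;
const pool = new ConnectionPool(async () => ({ id: ++opened }), 2);

async function demo() {
  const results = await Promise.all(
    [1, 2, 3, 4, 5].map((n) =>
      pool.use(async (conn) => `op ${n} on connection ${conn.id}`)
    )
  );
  return { results, opened };
}

demo().then(({ results }) => console.log(results));
```

Five operations run, but only two connections are ever opened; the other three operations wait their turn instead of opening new ones.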

Throttling frequent events: Things like typing indicators were hitting the server way too often. I added client-side throttling so a burst of keystrokes sends a single start event and, after a pause, a single stop event.

    // Throttle typing indicators on the client side
    class TypingManager {
      constructor(socket, roomId) {
        this.socket = socket;
        this.roomId = roomId;
        this.isTyping = false;
        this.typingTimeout = null;
      }

      startTyping() {
        if (!this.isTyping) {
          this.isTyping = true;
          this.socket.emit('typing-start', { roomId: this.roomId });
        }

        // Auto-stop typing after 2 seconds of inactivity
        clearTimeout(this.typingTimeout);
        this.typingTimeout = setTimeout(() => {
          this.stopTyping();
        }, 2000);
      }

      stopTyping() {
        if (this.isTyping) {
          this.isTyping = false;
          this.socket.emit('typing-stop', { roomId: this.roomId });
          clearTimeout(this.typingTimeout);
        }
      }
    }

What I Learned

Building this taught me a lot about distributed systems and real-time applications:

Plan for failures: Connections will drop, servers will crash, Redis might go down. Building in error handling and graceful degradation from the start saves a lot of headaches later.

Monitor everything: Real-time systems generate tons of data. I ended up tracking connection counts, message rates, Redis performance, and memory usage across all instances. You can't fix what you can't measure.

Load testing is crucial: The app behaved completely differently with 100 users vs 10,000. Load testing showed bottlenecks I never would have found otherwise, especially around Redis pub/sub performance.
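For the monitoring point, here's a minimal sketch of the kind of per-instance counters that help, assuming you export the snapshot to whatever metrics system you use. `ChatMetrics` is a hypothetical helper, not the actual monitoring code from this app; the clock is injectable so the example runs deterministically.

```javascript
// Tracks open connections and a sliding-window message rate for one instance.
class ChatMetrics {
  constructor(windowMs = 60000, now = () => Date.now()) {
    this.windowMs = windowMs;
    this.now = now;
    this.connections = 0;
    this.messageTimes = []; // timestamps of recent messages, oldest first
  }

  connectionOpened() { this.connections += 1; }
  connectionClosed() { this.connections = Math.max(0, this.connections - 1); }
  messageSent() { this.messageTimes.push(this.now()); }

  messagesPerSecond() {
    // Drop timestamps that have fallen out of the window.
    const cutoff = this.now() - this.windowMs;
    while (this.messageTimes.length > 0 && this.messageTimes[0] <= cutoff) {
      this.messageTimes.shift();
    }
    return this.messageTimes.length / (this.windowMs / 1000);
  }

  snapshot() {
    return {
      connections: this.connections,
      messagesPerSecond: this.messagesPerSecond(),
      heapUsedBytes: process.memoryUsage().heapUsed,
    };
  }
}

// Usage with a fake clock and a 10-second window.
let fakeTime = 0;
const metrics = new ChatMetrics(10000, () => fakeTime);

metrics.connectionOpened();
metrics.connectionOpened();
metrics.connectionClosed();
for (let i = 0; i < 20; i++) metrics.messageSent();

fakeTime = 5000;
const during = metrics.snapshot(); // 20 messages inside the window

fakeTime = 15000;
const after = metrics.snapshot();  // all 20 messages have aged out

console.log(during.connections, during.messagesPerSecond, after.messagesPerSecond);
```

On a real server, `connectionOpened` and `connectionClosed` would be called from the `connection` and `disconnect` handlers, and `messageSent` from the `send-message` handler.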

The final system handles over 15,000 concurrent connections with message latency averaging around 45ms. It's been running in production for 8 months now with 99.9% uptime, processing about 2 million messages daily.